In our everyday lives, we rely more and more on technology. The world is moving towards automating processes to make people’s lives more comfortable, easier, and safer. With the help of artificial intelligence (AI), automation is progressing from baby steps to confident strides. AI is used in various domains, such as language technology, where chatbots are programmed to converse with and assist customers, and the mobility industry, where autonomous vehicles are already a reality.

Getting AI to work seamlessly and accurately takes a lot of hard work and many hours. All this work and the mechanisms behind it, however, are not visible to the average user, which may cause misconceptions and concerns about AI. This, in turn, can lead to various issues, such as fear that robots will replace humans (1) and mistrust of AI-based systems (2). That’s why we need to talk more about what’s behind the term AI, how AI-based technology works, and where it can be used.

What is what? AI, machine learning, and neural networks

The terms artificial intelligence, machine learning, and neural networks are often used interchangeably, but they mean different things. Or, rather, they refer to parts of a whole: neural networks are a part of machine learning, which in turn is a part of artificial intelligence (you can think of them as Matryoshka dolls). Let’s take a closer look at the terms and what they mean.

Artificial intelligence. AI is the ability of digital computers or robots to mimic human behavior that calls for such skills as problem-solving and learning. While humans are capable of showing intelligence in different aspects of life, computers can reach an expert level only in performing specific tasks. For this reason, AI as it exists today is known as weak or narrow AI. Although narrow AI can solve complex problems, often more efficiently than humans, its functionality is limited. Examples of narrow AI include digital voice assistants, recommendation and search engines, chatbots, autonomous vehicles, and image and speech recognition.

Machine learning. Machine learning is a subset of AI in which systems learn from data, make predictions, and improve themselves without being explicitly programmed. Simply put, the systems “look” at the data, search for patterns in it and, based on these patterns, make predictions about what new data means. Different algorithms are used to detect the patterns and make the predictions.

Machine learning algorithms are commonly categorized as supervised or unsupervised. With supervised algorithms, the data is labeled before learning, and predictions on new data are based on what was learned from the labeled data. This method is used to find specific patterns in the data so that the system can give the correct output. With unsupervised algorithms, on the other hand, the data is not labeled, and the aim is to detect hidden patterns rather than to give a known correct output. Which method to use depends on the nature of the specific task.
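To make the difference concrete, here is a minimal sketch in plain Python. The toy one-dimensional data, the label names, and the helper functions are all made up for illustration; the supervised part is a simple nearest-centroid classifier and the unsupervised part is k-means with two clusters, chosen only because they are short, not because they are the methods any particular system uses.

```python
# Toy 1-D data: small values form one group, large values another.
labeled = [(1.0, "small"), (1.5, "small"), (8.0, "large"), (9.0, "large")]
unlabeled = [value for value, _ in labeled]


def train_centroids(samples):
    """Supervised: average the values that share the same label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Return the label whose centroid lies closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(values, iterations=10):
    """Unsupervised: split values into two clusters (k-means with k=2).

    Assumes the values contain at least two distinct numbers.
    """
    low, high = min(values), max(values)
    for _ in range(iterations):
        cluster_low = [v for v in values if abs(v - low) <= abs(v - high)]
        cluster_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(cluster_low) / len(cluster_low)
        high = sum(cluster_high) / len(cluster_high)
    return sorted(cluster_low), sorted(cluster_high)


centroids = train_centroids(labeled)      # learned from labeled data
prediction = predict(centroids, 2.0)      # label for a new, unseen value
clusters = two_means(unlabeled)           # groups found without any labels
```

The supervised model can name its answer ("small" or "large") because the labels were provided. The unsupervised one only discovers that two groups exist; what those groups mean is left for a human to interpret.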

Neural networks. To make predictions, machine learning uses algorithms. While there are many machine learning algorithms, the important recent developments in AI are associated with neural networks. There are distinct types of neural networks, such as multi-layer perceptrons (MLP), recurrent networks, and convolutional neural networks (3). Which type of neural network to use depends on the aim of the system.

In essence, neural networks imitate the architecture of the human brain, i.e., they consist of so-called neurons and the connections between them. Let’s explain this in a bit more detail, taking the convolutional neural networks used in image recognition as an example. These networks mimic how the human visual system works: they pass the images through a series of filters in convolutional layers to extract features at increasing levels of abstraction. Once a sufficient level of abstraction is reached, it is possible to recognize objects in the images or even the individual pixels belonging to an object.
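What a single filter does can be sketched in a few lines of plain Python. The tiny image, the hand-written filter values, and the function name below are illustrative assumptions; in a real network the filter values are learned, and there are many filters stacked in several layers. As in most deep learning frameworks, the operation shown is strictly speaking a cross-correlation (the filter is not flipped).

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A filter that responds to a dark-to-bright transition from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
# feature_map == [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, which is where the vertical edge sits. Later layers apply further filters to maps like this one, which is how features of increasing abstraction are built up.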

However, while they are an important part of AI, such networks make little sense without a proper set of filter parameters. The number of these parameters runs into the millions, so they cannot be programmed manually. Instead, they are optimized during the network training process. This training requires lots of data, and the data must initially come with the correct answers. Here’s why.
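The idea of optimizing parameters from examples with known answers can be illustrated with a deliberately tiny model. The sketch below assumes a model with a single parameter `w`, three made-up training examples, and plain gradient descent on the mean squared error; real networks have millions of parameters and use more elaborate optimizers, but the principle is the same.

```python
# Each example pairs an input with its correct answer (here, answer = 2 * input).
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


def train(examples, learning_rate=0.05, epochs=200):
    """Fit the model `prediction = w * x` by gradient descent."""
    w = 0.0  # start from an arbitrary value
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        # Nudge w in the direction that reduces the error.
        w -= learning_rate * gradient
    return w


weight = train(examples)  # converges towards 2.0
```

Nobody typed in the value 2.0; it was recovered from the examples alone. Without the correct answers in `examples`, there would be no error to compute and nothing to steer the parameter.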

Annotating the training data is a must in supervised learning

As we’ve already established, AI uses machine learning, which helps it to learn and make its predictions constantly better and more accurate. However, the accuracy level of the predictions depends on the input data that is used in the learning process. In the case of supervised learning, the input data must be annotated.

The process of annotation, however, is time-consuming since it’s done manually (although there is usually some automation that can reduce the annotation workload). The volume of data needed depends on the aim of the AI (why it is developed and what problems it must solve), but it has to be large to obtain good results. Generally, the more data is available for the training process, the better the outcome.

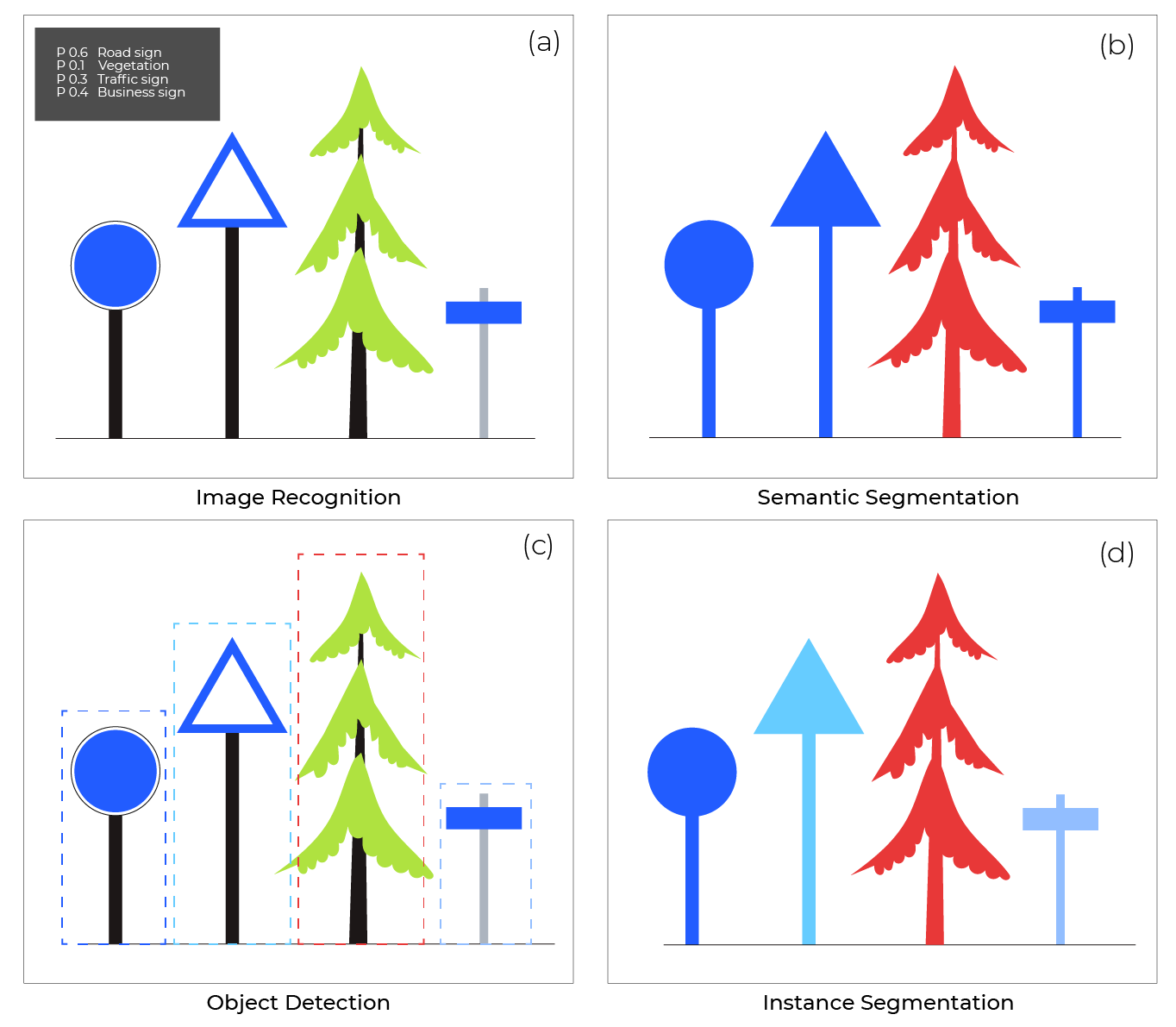

The way the data is annotated depends on the nature of the task being addressed. Typical computer vision tasks include classification, object detection, and segmentation. In classification, we only need to assign class labels to the objects depicted in the images (panel (a) of the figure). In object detection, we need to provide bounding box coordinates in addition to class labels (panel (c)). In segmentation, we need to assign a class label to each individual pixel of the image (panels (b) and (d)).
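The three kinds of annotation can be pictured as three data structures of growing detail. The sketch below is only an illustration: the class names, the field names, the pixel-coordinate convention, and the `box_from_mask` helper are assumptions made for this example, not the format of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass
class ClassificationLabel:
    """Classification: one class label for the whole image."""
    class_name: str


@dataclass
class BoundingBox:
    """Object detection: a class label plus box coordinates in pixels."""
    class_name: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


# Segmentation: one class id for every pixel of the image.
CLASS_NAMES = {0: "background", 1: "traffic_sign"}
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]


def box_from_mask(mask, class_id):
    """Derive the tightest box around all pixels of a class (None if absent)."""
    rows = [r for r, row in enumerate(mask) if class_id in row]
    cols = [c for row in mask for c, value in enumerate(row) if value == class_id]
    if not rows:
        return None
    return BoundingBox(CLASS_NAMES[class_id], min(cols), min(rows), max(cols), max(rows))


image_label = ClassificationLabel("traffic_sign")
box = box_from_mask(mask, 1)
```

A pixel mask carries enough information to derive a box, and a box enough to derive an image-level label, but not the other way round. That is one reason segmentation annotation is the most laborious of the three.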

So how does it all work in practice? Let’s take feature detection from 3D geospatial data as an example. The data consists of visual imagery and point clouds. Annotating features in images can mean drawing a detailed mask or simply drawing a box around a particular feature (e.g., a traffic sign). Point clouds are generally classified into different classes, such as ground, posts, buildings, and vegetation. Annotating this kind of spatial data is quite tricky. Whether some pixels in an image or points in a point cloud belong to a certain feature or class can be subjective and demands good judgment from annotators.

Thus, AI systems need a lot of work and input data before they reach an acceptable accuracy level (i.e., the level of accuracy that human experts can achieve). Therefore, developing AI-based technology requires an investment of time and money. This investment, however, will pay off.

What good can come from the use of artificial intelligence?

The use of AI is beneficial in different domains, and the results of a recent survey by IBM (4) indicate that the use of AI-based technology is on the rise. This is also supported by the AI Dossier report (5), which states that AI will bring value through cost reduction, increased speed to execution, reduced complexity, transformed engagement, fueled innovation, and fortified trust. For example, in business, using AI to predict consumer demand and customers’ next actions by analyzing customer data from various sources can help in assortment optimization (6). With knowledge of customers’ demands and future actions, it’s possible to make better decisions on how to provide customers with what they really need. This can increase both customer satisfaction and business revenue.

Another good example is the health care domain. Diagnosing a patient can be a complex task since many factors may influence their health: for instance, their genetic background, lifestyle, and overall medical history, in addition to their current symptoms. With the help of AI, the diagnosis can be made more accurately. By analyzing vast amounts of data from various sources, AI can uncover complex patterns and characteristics of a disease that humans might not even think about (7).

In the geospatial domain, AI has been successfully applied to defect detection in predictive road maintenance, saving time and money and adding value in terms of data processing (8, 9). Introducing AI to such activities as predictive road maintenance, traffic management, and safety auditing can take their results to a whole different level in terms of speed and cost-effectiveness (10). This, in turn, will make traveling more comfortable and safer for everyone.

In light of this knowledge, we can conclude that the use of AI in various domains has brought positive results and continues to do so. Also, there is no need to worry: the human touch won’t disappear from AI. Humans are the ones who set the aims for the AI, create the data on which it is trained, and supervise the whole training process.

Sources

1. 365DataScience. Debunking 10 Misconceptions About AI [Internet, cited 2021 October 7]. Available from: https://365datascience.com/trending/debunking-misconceptions-ai/

2. Zhang, Z., Citardi, D., Wang, D., Genc, Y., Shan, J., Fan, X. Patients’ perceptions of using artificial intelligence (AI)-based technology to comprehend radiology imaging. Health Informatics Journal [Internet]. 2021 April [cited 2021 October 7]. Available from: https://journals-sagepub-com.ezproxy.utlib.ut.ee/doi/10.1177/14604582211011215

3. IBM. Neural Networks [Internet, cited 2021 October 7]. Available from: https://www.ibm.com/cloud/learn/neural-networks

4. IBM. Global AI Adoption Index 2021 [Internet, cited 2021 October 7]. Available from: https://filecache.mediaroom.com/mr5mr_ibmnewsroom/191468/IBM%27s%20Global%20AI%20Adoption%20Index%202021_Executive-Summary.pdf

5. Deloitte. Deloitte AI Institute Unveils the Artificial Intelligence Dossier, a Compendium of the Top Business Use Cases for AI [Internet]. 2021 August [cited 2021 October 7]. Available from: https://www2.deloitte.com/us/en/pages/about-deloitte/articles/press-releases/deloitte-announces-ai-dossier.html

6. Deloitte. The AI Dossier [Internet, cited 2021 October 7]. Available from: https://www2.deloitte.com/content/dam/Deloitte/us/Documents/deloitte-analytics/us-ai-institute-ai-dossier-full-report.pdf

7. Deloitte. The Life Sciences & Health Care AI Dossier [Internet, cited 2021 October 7]. Available from: https://www2.deloitte.com/content/dam/Deloitte/us/Documents/deloitte-analytics/us-ai-institute-life-sciences-healthcare-dossier.pdf

8. EyeVi Technologies. The use of artificial intelligence (AI) in defect detection of roads infrastructure [Internet]. 2021 May 10 [cited 2021 June 14]. Available from: https://eyevi.tech/news/the-use-of-artificial-intelligence-ai-in-defect-detection-of-roads-infrastructure/

9. EyeVi Technologies. XAIS and EyeVi complete first fully automated survey in London [Internet]. 2021 August 31 [cited 2021 October 7]. Available from: https://eyevi.tech/news/xais-and-eyevi-complete-first-fully-automated-survey-in-london/

10. EyeVi Technologies. Connecting the dots between predictive road maintenance, road safety inspection, and traffic management [Internet]. 2021 June 21 [cited 2021 October 7]. Available from: https://eyevi.tech/news/connecting-the-dots-between-predictive-road-maintenance-road-safety-inspection-and-traffic-management/
